By Jim Dalglish

Director of UX Research & Strategy

How I use a custom version of ChatGPT to analyze interviews, synthesize survey data, and surface real insight — without losing the human touch.

If you've ever spent hours knee-deep in structured survey data and open-ended responses, or scrolling through pages of interview transcripts, you know how overwhelming qualitative analysis can be. The process is often messy, nonlinear, and deeply human, which is exactly why I used to hesitate to bring AI into the equation.

But then I built a custom GPT, a specialized version of ChatGPT that I configured to work the way I think. I call him Ulysses.

Naming your bot may make the work feel a little more collaborative.

SPECIAL NOTE: Throughout this article I often refer to the chatbot I created, and to the steps you should take with yours, as Ulysses. When I say “upload to Ulysses,” I mean upload to your own chatbot. I hope you don’t find it confusing!

Ulysses isn't just another chatbot. He’s a research partner. A strategic assistant that helps me spot patterns, synthesize insights, and get to the “aha” moments faster — without skipping the nuance and context that good research depends on.

In this post, I’ll walk you through how to create your own GPT like Ulysses and show you how I use mine to work through complex qualitative data — especially open-ended surveys, interview transcripts, and focus group summaries. This isn’t about replacing the human insight process. It’s about accelerating it — and making it more grounded, flexible, and (dare I say) enjoyable.

How to Set Up Your Own GPT Entity (Like Ulysses)

Start with privacy, then build your research partner. Before you start building your own GPT, there’s one non-negotiable rule you should know:

Do not upload sensitive or proprietary data into the free version of ChatGPT.

The free version (and even ChatGPT Plus, unless configured correctly) may use your data to train future models. If you’re working with real users — especially in healthcare, education, finance, or internal organizational research — you need to protect their privacy. Here’s how to set up a safe and effective GPT research assistant:

1. Use ChatGPT Plus or Enterprise

ChatGPT Plus (at $20/month) gives you access to GPT-4 and custom GPTs.

Enterprise gives you added privacy guarantees and full control over data handling.

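If you're using ChatGPT Plus, go into Settings → Data Controls and turn off the option that allows your data to be used for training. This keeps your chats, and anything you upload, out of future model training; it doesn't anonymize anything for you, so the privacy steps later in this article still apply.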

2. Create Your Own GPT

Go to the ChatGPT interface and click on “Explore GPTs.”

Click “Create” to launch the GPT builder.

You’ll be prompted to describe what your GPT should do. This is where you shape it: not by coding, but by telling it how you work.

For example, with Ulysses, I said:

“You are a research assistant that helps synthesize open-ended survey responses, interview transcripts, and focus group conversations. You prefer structured, thematic analysis. You ask clarifying questions when the context is unclear. You treat data with care and never guess beyond what’s given.”

You can also define tone, personality, strengths, and even upload example prompts. Once finished, give it a name (mine’s Ulysses) and publish it privately to your account.

3. Work Inside the Secure Instance

Once your bot is set up, use it only within that Plus or Enterprise environment. Upload your files directly into that chat and give it guidance like:

“Here’s a transcript of 10 interviews with prospective students.”

“Please analyze the themes and back them up with quotes.”

“Let me know if anything seems unclear or needs clarification.”

That’s your secure setup — now you’ve got a custom GPT designed to think the way you do. Next, let’s talk about how to bring it into a real research project and start generating meaningful insights.

Onboarding Your Bot Into Your Project

Give it the context it needs to think like you do.

Now that your GPT is set up, don’t just drop in a transcript or survey and hope for the best. Like any good collaborator, your chatbot needs context. It performs best when it understands:

What the project is about

Who the data came from

What you’re trying to figure out

What kind of deliverable you’re building toward

Think of this as your onboarding briefing — the quick download you'd give to a junior team member before asking them to analyze a dataset.

Here’s what I typically include up front:

1. Your Role

Let your chatbot know who you are in the process.

Examples:

“I’m a researcher synthesizing interviews from a usability study.”

“I’m a strategist building a messaging framework based on survey data.”

“I’m prepping a conference presentation on how alumni perceive the value of their degree.”

2. Project Goals

What’s the big picture? Why are you doing this research in the first place?

Be specific. The more your bot understands the strategic context, the more aligned the insights will be.

Examples:

“The goal is to identify emotional drivers behind enrollment decisions for adult learners.”

“We're looking for messaging gaps between prospective students and institutional staff.”

“I need to understand what factors lead admitted students to decline an offer.”

3. Upload Background Documents

What does your chatbot need to know?

Upload or share relevant background documents and research: the project’s RFP, kickoff documents, and any prior work, including completed research, brand guidelines, personas, and journey maps.

4. Ask for Client/Project Background

What does your chatbot already know about your client or the project’s background?

Take advantage of any knowledge your chatbot already has. If web browsing is enabled for your GPT, you can also ask Ulysses to retrieve and summarize publicly available research, institutional updates, or media coverage related to the institution or the research project.

“Can you provide a summary of any recent news, strategic plans, or research findings related to [Institution Name] from publicly available sources?”

5. Audience and Data Type

What kind of data are you sharing? Where did it come from? Who participated?

Include details like:

Survey vs. interview vs. focus group

Number of responses

Audience type (students, staff, alumni, etc.)

Format (PDF, CSV, DOCX)

Examples:

“This is a CSV of open-ended survey responses from 300 high school seniors who were accepted but didn’t enroll.”

“This PDF contains six interviews with faculty members across different departments.”

“These are anonymized transcripts from two focus groups with adult learners who completed their degree online.”

6. What You Need From Your Chatbot

Spell it out. What kind of analysis or support are you looking for?

Examples:

“Identify recurring themes and support them with direct quotes.”

“Help me compare perspectives from alumni and current students.”

“Summarize key findings in a format I can drop into a slide deck.”

Once you've given this background, you're ready to upload the data and start working together.

Prepping and Uploading Your Data

Because your bot can’t analyze what it can’t read.

My pal Ulysses is smart, but he’s not a magician. If your data is messy, mislabeled, or incomplete, your insights will be too. Before uploading anything, take a few minutes to prep your files. Think of it like setting the table before the conversation begins.

Here’s how I make sure the data is readable, structured, and ready for real analysis:

1. Clean Up Your Transcripts

Whether you’re uploading interview transcripts, focus group notes, or open-ended survey exports, make sure your documents are easy to follow.

Special Note:

If you use an AI transcription tool such as Fathom, you can skip many of the following steps. Exporting from tools like Fathom Notetaker, Zoom, or Otter as a PDF typically produces clean, timestamped, speaker-labeled transcripts. I recommend investing in one of these tools; they will make your life much easier!

Do:

Clearly label each speaker (e.g., STUDENT, MODERATOR, ALUMNI 1)

Separate different interviews with headers (e.g., — Interview 3: Transfer Student —)

Remove filler like “uh,” “[inaudible],” or timestamps unless necessary

Double-check that participant roles or audience segments are noted

Don’t:

Upload one long, unlabeled transcript

Mix audiences without clarifying who’s who

Leave in sensitive info like names, email addresses, or student IDs
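If you have a batch of raw transcripts and are comfortable with a little Python, some of the cleanup in the Do list can be automated. The sketch below is a minimal example, not a finished tool: it assumes timestamps look like `[00:01:15]` and that “uh,” “um,” and “erm” are the filler words you want gone, and the names `clean_line` and `clean_transcript` are made up for illustration. Reread the output afterward; a timestamp pattern like this will also strip a time someone actually said aloud, such as “10:30.”

```python
import re

# Hypothetical cleanup patterns; adjust them to match your own transcript format.
TIMESTAMP = re.compile(r"\[?\b\d{1,2}:\d{2}(?::\d{2})?\b\]?\s*")  # e.g. [00:01:15]
INAUDIBLE = re.compile(r"\[inaudible\]\s*", re.IGNORECASE)
FILLER = re.compile(r"\b(?:uh|um|erm)\b,?\s*", re.IGNORECASE)


def clean_line(line: str) -> str:
    """Remove timestamps, [inaudible] markers, and filler words from one line."""
    for pattern in (TIMESTAMP, INAUDIBLE, FILLER):
        line = pattern.sub("", line)
    # Collapse any double spaces the removals leave behind.
    return re.sub(r"\s{2,}", " ", line).strip()


def clean_transcript(text: str) -> str:
    """Clean every line but keep blank lines, so breaks between interviews survive."""
    return "\n".join(clean_line(line) for line in text.splitlines())


print(clean_line("[00:01:15] STUDENT: Uh, I think, um, the cost [inaudible] mattered."))
# STUDENT: I think, the cost mattered.
```

Speaker labels are left untouched, which matters because they are exactly what you want your bot to rely on.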

Pro Tip:

Use PDFs or DOCX files when possible. They retain formatting, speaker labels, and section breaks better than plain text files — which can scramble structure and confuse the model.

2. Combine Strategically (When Needed)

Sometimes it's best to combine multiple interviews or responses into one document — especially if you're trying to spot patterns across participants.

Just be sure to:

Keep a clear structure (headings between sessions help)

Maintain consistent formatting and speaker identifiers

Let your bot know what you’ve done (e.g., “This file includes 10 interviews with admitted students who declined enrollment. Each section is labeled.”)

Combining helps your chatbot detect recurring language, themes, or points of divergence — which is harder to do if you upload documents one at a time.
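The combining step can also be scripted if you have many sessions. Here is a rough Python sketch, assuming each interview is already a plain-text string with speaker labels; `combine_interviews` and the sample labels are hypothetical. It puts your explanatory note at the top and a numbered header before each session, so the file itself tells the bot what it contains.

```python
def combine_interviews(interviews: list[tuple[str, str]], intro: str) -> str:
    """Join labeled transcripts into one document with a header per session."""
    parts = [intro.strip(), ""]
    for number, (label, text) in enumerate(interviews, start=1):
        parts.append(f"--- Interview {number}: {label} ---")
        parts.append(text.strip())
        parts.append("")  # blank line between sessions
    return "\n".join(parts)


# Hypothetical sessions for illustration.
combined = combine_interviews(
    [
        ("Transfer Student", "MODERATOR: What drew you here?\nSTUDENT: The advising."),
        ("Adult Learner", "MODERATOR: What drew you here?\nSTUDENT: Evening classes."),
    ],
    intro="This file includes 2 interviews with admitted students. Each section is labeled.",
)
print(combined)
```

To build the list from files instead, something like `[(p.stem, p.read_text(encoding="utf-8")) for p in sorted(Path("transcripts").glob("*.txt"))]` (with `from pathlib import Path`) would work, assuming a hypothetical `transcripts` folder of plain-text files named after each participant segment. You can then paste the result into a DOCX before uploading.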

3. Be Segment-Savvy

If your data includes distinct audience segments — like prospective students vs. current students, or faculty vs. staff — make that explicit.

You can do this in one of two ways:

Within the data itself: Add a column, label, or heading (e.g., “Segment: Transfer Student”)

As a note to your bot: Tell it who each group is and how they’re identified

Example input:

“This spreadsheet includes responses from three groups: Current Students, Alumni, and Faculty. Each row includes a ‘Segment’ column. Please compare how each group discusses sense of belonging.”
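Before uploading a spreadsheet like that, it is worth confirming that every row actually carries a segment label. Here is a minimal sketch using Python’s standard `csv` module, assuming a hypothetical export with a header row and a column named `Segment`: it counts responses per segment and tallies unlabeled rows as “(missing)” so you can fix them first.

```python
import csv
import io
from collections import Counter


def segment_counts(csv_text: str, column: str = "Segment") -> Counter:
    """Count responses per segment; rows with a blank label are tallied as "(missing)"."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise ValueError(f"No {column!r} column found in the header row")
    counts: Counter = Counter()
    for row in reader:
        label = (row.get(column) or "").strip()
        counts[label or "(missing)"] += 1
    return counts


# In practice you would read your own export, e.g. (hypothetical file name):
# with open("responses.csv", newline="", encoding="utf-8") as f:
#     print(segment_counts(f.read()))
sample = "Segment,Response\nAlumni,Great mentors\nFaculty,Too many emails\nAlumni,Worth it\n,No label here\n"
print(segment_counts(sample))
```

The same counts are handy later, when you check whether each group was actually represented in the bot’s summary.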

4. Confirm File Coverage

For longer documents, it’s always worth checking that your chatbot read the whole thing.

After uploading, I often prompt with:

“Can you confirm that you’ve read the entire file? Were there any parts (especially toward the end) that weren’t processed or cut off?”

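Better safe than sorry, especially when insights live in the margins. Keep in mind that a chatbot can answer “yes” without having processed everything, so it also helps to ask for a quote from the final pages and search for it in your file.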

5. Respect Privacy — Always

This should go without saying, but: Never upload files that contain personally identifiable information (PII) — names, emails, phone numbers, IDs, etc.

If you’re working with sensitive content, anonymize first. Use roles or pseudonyms instead of real names, and double-check your files before uploading.
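Anonymizing is ultimately a manual review job, but a script can catch the obvious patterns first. The Python sketch below is a starting point under stated assumptions: the email and phone patterns are simplified, the student ID format (one letter followed by seven digits) is invented for illustration, and the hypothetical `redact` function only replaces names you list yourself. It will miss formats it wasn’t written for, such as “(508) 555-0199,” so still double-check files before uploading.

```python
import re

# Hypothetical patterns; check them against the formats in your own data.
PATTERNS = {
    "[EMAIL]": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "[PHONE]": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "[STUDENT_ID]": re.compile(r"\b[A-Z]\d{7}\b"),  # assumed ID format: letter + 7 digits
}


def redact(text: str, pseudonyms: dict[str, str]) -> str:
    """Replace pattern-matched PII with placeholders and known names with pseudonyms."""
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    # Replace longer names first so "Maria Lopez" is handled before "Maria".
    for name in sorted(pseudonyms, key=len, reverse=True):
        alias = pseudonyms[name]
        text = re.sub(rf"\b{re.escape(name)}\b", lambda _match: alias, text)
    return text


print(redact(
    "Maria Lopez (maria.lopez@example.edu, 508-555-0199, ID A1234567) said Maria loved it.",
    {"Maria Lopez": "Participant 1", "Maria": "Participant 1"},
))
# Participant 1 ([EMAIL], [PHONE], ID [STUDENT_ID]) said Participant 1 loved it.
```

Mapping every variant of a person’s name to the same pseudonym keeps their responses linked, which your bot needs in order to follow one participant across a transcript.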

Once your data is clean, clear, and well-labeled, Ulysses (or whatever you call your bot) is ready to dive in.

How to Analyze Data with Your Bot

This is where the magic happens — with your guidance.

Once you’ve uploaded your dataset and given your chatbot the right context, you’re ready to start analyzing. But remember: this isn’t a one-click “analyze my data” moment. The best insights don’t come from a single command; they come from dialogue.

Think of your bot as a junior strategist sitting beside you. It needs your prompts, your curiosity, and your feedback to do its best work. Here’s how I work through analysis in a way that’s both efficient and grounded.

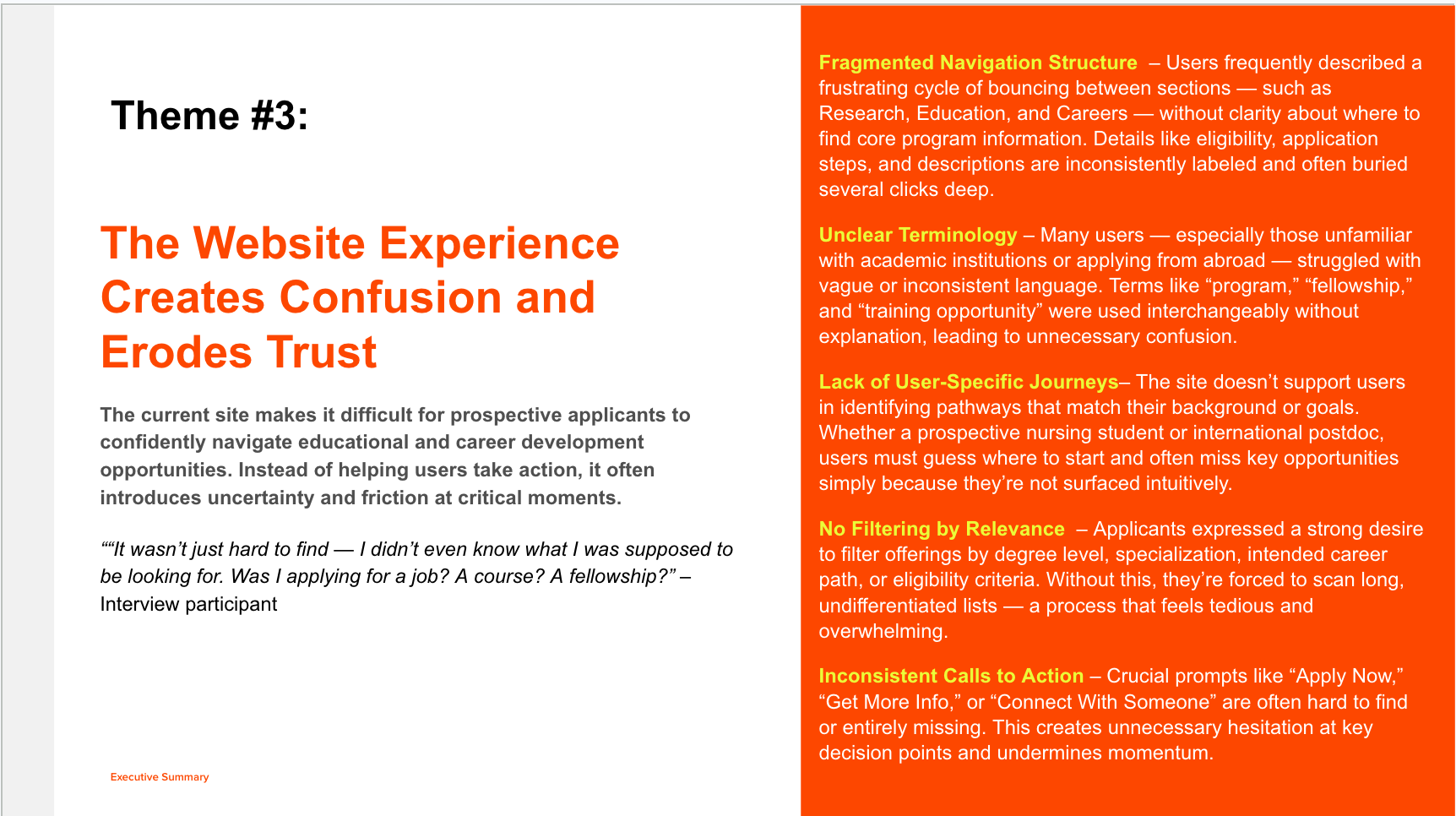

Start with a High-Level Thematic Summary

I usually begin by asking Ulysses to scan the full dataset and surface key patterns.

Example prompt:

“What are the top 4–5 themes that emerge across this data? Please include a short summary for each theme and back it up with representative quotes.”

You’re looking for repeated ideas, common phrases, tensions, surprises — anything that feels like it carries meaning. Your bot will flag what it sees and offer summaries. From there, you can go deeper.

Then Drill Down by Theme, Question, or Segment

Once you’ve got a set of high-level themes, pick one and explore it more closely.

Sample prompts:

“Let’s focus on the theme of ‘belonging.’ How did different audience types describe it?”

“Can you compare how alumni and current students talked about affordability?”

“Did any participants express conflicting views on this topic? Show me the range.”

Ulysses is great at pattern recognition, but it’s your job to ask the questions that tease out nuance, contrast, and insight.

Ask for Evidence — Always

Never settle for vague summaries. Push your bot to support everything with quotes, examples, or direct responses.

Prompt:

“For each theme, include at least one quote or response that supports it. Prefer quotes that are clear, compelling, and reflect the tone of that group.”

This is especially helpful if you're preparing a deck, messaging platform, or audience brief. A good quote can do more than a dozen bullet points.

Use the “Universal Theme Template”

When I am building presentations or writing strategy briefs, I often ask Ulysses to structure findings in a repeatable format:

Theme Name

Summary: 2–3 sentence explanation

Frequency Rating: (e.g., ⭐⭐⭐⭐☆ = widely mentioned)

Evidence: A quote or response that brings the theme to life

Strategic Implication (optional): Why this matters

You can prompt for this directly:

“Use the Universal Theme Template to summarize the key findings from this data.”

It gives you something clean, consistent, and easy to repurpose in a deck or doc.
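If you track themes outside the chat, for example to cross-check the bot’s frequency ratings against your own counts, the template maps neatly onto a small data structure. This is a hypothetical Python sketch: the `Theme` class is not part of any tool, and the star cut-offs (share of respondents scaled to five stars and rounded, with a minimum of one) are an assumption, since the template itself doesn’t define what each rating means.

```python
from dataclasses import dataclass


@dataclass
class Theme:
    """One entry in the Universal Theme Template (hypothetical structure)."""
    name: str
    summary: str
    mentions: int     # respondents who raised the theme
    respondents: int  # total respondents in the dataset
    evidence: str
    implication: str = ""  # optional strategic implication

    def stars(self) -> str:
        # Assumed cut-offs: share of respondents scaled to 1-5 stars.
        filled = min(5, max(1, round(5 * self.mentions / self.respondents)))
        return "⭐" * filled + "☆" * (5 - filled)

    def render(self) -> str:
        lines = [
            self.name,
            f"Summary: {self.summary}",
            f"Frequency Rating: {self.stars()} ({self.mentions} of {self.respondents})",
            f'Evidence: "{self.evidence}"',
        ]
        if self.implication:
            lines.append(f"Strategic Implication: {self.implication}")
        return "\n".join(lines)


# Placeholder values for illustration only.
example = Theme(
    name="Belonging",
    summary="Placeholder summary.",
    mentions=24,
    respondents=30,
    evidence="Placeholder quote.",
)
print(example.render())
```

Showing the raw count next to the stars makes it easy to spot when a rating the bot assigned doesn’t match what you counted yourself.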

Compare and Contrast Segments

If your data includes multiple groups, audience types, or experiences — use your bot to surface the differences.

Try prompts like:

“Compare how faculty vs. staff described institutional communication.”

“Did adult learners emphasize different priorities than traditional undergrads?”

“Which themes were unique to those who declined to enroll?”

Segment-level insight is often where strategy takes shape — and where you find the seeds of differentiation.

Turning Dialogue Into Insight

Don’t treat your chatbot like a report generator — treat it like a thought partner.

One of the biggest mistakes I see people make with AI tools like ChatGPT is stopping after the first summary. But insight doesn’t usually live in a list of bullet points. It lives in the back-and-forth — the places where you push, reframe, question, and dig deeper.

That’s where my bot Ulysses shines.

The first analysis your bot gives you? Think of it as a starting draft. From there, the conversation begins. Here’s how I work with it to move from raw findings to meaningful insight:

Ask It to Clarify or Refine Themes

Once Ulysses surfaces themes, I don’t just nod and move on. I challenge them.

Try:

“Can you break this theme into smaller sub-themes?”

“Is this really one theme or two different ideas grouped together?”

“What did this group say that contradicts this theme?”

Often, the second or third version of a theme is sharper, more nuanced, and more strategically useful than the first.

Explore Hypotheses

Use your chatbot to test your hunches and chase emerging ideas.

Prompts like:

“I suspect affordability concerns are stronger among first-gen students. Can you check that?”

“Are students who mentioned career readiness also more likely to talk about mentorship?”

“Does this theme show up more in responses from women than men?”

This is where you shift from summarizing what people said to understanding why they said it — and what that means for your work.

Look for Outliers and Tensions

Sometimes the most useful insight is what doesn’t fit the pattern.

Ask:

“Are there responses that pushed back on this idea?”

“Was there a minority perspective that stood out?”

“Are there any surprising quotes that might reshape how we interpret this?”

Insight often comes from friction — so make space for it.

Reframe for Strategy or Messaging

Once you’ve worked through the data and refined the themes, you can ask your bot to shift gears.

Prompts like:

“Can you reframe these findings as messaging pillars?”

“Write these insights as 3 slide-ready bullets, each with a headline takeaway.”

“Summarize these results for a skeptical VP of Marketing.”

You’re not just generating findings. You’re translating them into something usable, persuasive, and aligned with your goals.

Always Stay Grounded in the Data

As you iterate and reframe, remind your bot to stay anchored in what was actually said.

Example:

“As we explore messaging ideas, keep using only the data from the uploaded transcripts — no assumptions beyond that.”

AI tools can be confident guessers. That’s not what we want here. We want precision, integrity, and trust in the insight.

Validating Your Chatbot’s Work

Before you hit “copy/paste,” make sure the insights hold up.

As powerful as Ulysses is, it’s not infallible. It’s trained to analyze, summarize, and support — but ultimately, you are the strategist. And like with any junior team member, it’s your job to double-check the work before it goes out the door.

Here’s how I review, validate, and polish Ulysses’s output before using it in a deliverable:

1. Verify the Quotes

Ulysses will often summarize a theme and include a quote — but it’s always worth checking that the quote actually exists in the source material, and that it says what Ulysses claims it does.

Best practice:

Copy the quote and search for it in your original file.

Confirm it hasn’t been subtly rewritten or taken out of context.

If a quote feels off, ask:

“Can you show me the exact source for this quote again?”
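Searching by hand works, but small differences in curly quotes, line breaks, or capitalization can hide a genuine match. This Python sketch normalizes both texts before checking whether the quote appears verbatim; `quote_in_source` is a hypothetical helper, and it only tells you whether the words exist in the file, not whether they were quoted in context.

```python
import re


def normalize(text: str) -> str:
    """Unify curly quotes, collapse whitespace, and lower-case the text."""
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_in_source(quote: str, source: str) -> bool:
    """True if the quote appears verbatim, ignoring case, whitespace, and quote style."""
    return normalize(quote).strip('"').strip() in normalize(source)


transcript = "STUDENT: Honestly, the tour\n  made me feel like I’d belong here."
print(quote_in_source("the tour made me feel like I'd belong here", transcript))  # True
print(quote_in_source("the tour made me feel at home", transcript))  # False
```

A `False` means the quote was paraphrased, stitched together, or invented, which is your cue to ask the bot for the exact source.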

2. Check for Overweighting

Sometimes a single powerful response shows up again and again — making it seem more representative than it actually is.

Quick test:

Look for repeated quotes across multiple themes.

Ask: “Was this sentiment shared broadly, or just by one or two respondents?”

If one voice is dominating, it might need to be reframed as an outlier, not a core theme.
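For longer reports, the overweighting check can be done mechanically once you paste the bot’s evidence into a simple structure. The sketch below assumes a hypothetical dictionary mapping each theme name to the quotes cited for it, and flags any quote used under two or more themes. A flagged quote isn’t necessarily a problem, but it is a cue to ask how many respondents actually shared the sentiment.

```python
from collections import defaultdict


def repeated_quotes(themes: dict[str, list[str]], min_themes: int = 2) -> dict[str, list[str]]:
    """Return each quote cited under at least `min_themes` themes, with those themes."""
    used_in: dict[str, list[str]] = defaultdict(list)
    for theme, quotes in themes.items():
        # Count a quote once per theme, even if it was cited twice there.
        for quote in {q.strip() for q in quotes}:
            used_in[quote].append(theme)
    return {quote: names for quote, names in used_in.items() if len(names) >= min_themes}


# Hypothetical evidence copied out of a bot's theme summary.
evidence = {
    "Belonging": ["I felt seen on my first visit.", "Clubs helped me settle in."],
    "Affordability": ["I felt seen on my first visit.", "The aid letter was confusing."],
    "Advising": ["My advisor knew my name."],
}
print(repeated_quotes(evidence))
# {'I felt seen on my first visit.': ['Belonging', 'Affordability']}
```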

3. Confirm Full Document Coverage

Especially with longer uploads, GPT models can sometimes front-load their attention — focusing more on what’s at the beginning of a document.

Prompt to use:

“Please confirm that the entire file was processed. Were there any themes or perspectives that emerged more strongly in the second half of the data?”

This helps ensure the full picture is reflected — not just the early pages.

4. Look for Missing or Underdeveloped Themes

Just because something doesn’t show up in the summary doesn’t mean it wasn’t in the data. If a key concept you expected to see is missing, ask about it directly.

Prompt:

“Did anyone talk about career services or job placement? If so, how frequently — and what did they say?”

Sometimes important themes are quieter or less frequent — but that doesn’t make them irrelevant. Especially if they tie to your strategic goals.

5. Validate Segment Representation

If your dataset includes multiple audience types, double-check that each group was heard.

Prompt:

“Can you summarize how each segment — for example, alumni, current students, and staff — discussed this theme? Did any group have a notably different perspective?”

This is especially helpful when your output is feeding into personas, messaging platforms, or segmented briefs.

6. Tone-Check the Summary

Occasionally your chatbot (Ulysses included) may overemphasize either positive or negative language, especially when a few strong quotes skew the tone.

Ask:

“What was the overall tone of responses to this question? Were there any emotional outliers that might be distorting the summary?”

This helps you avoid overgeneralizing — or overreacting — to particularly charged responses.

Final reminder:

Treat your bot the way I treat Ulysses: like a sharp but junior team member. Trust its patterns. Question its assumptions. And make the final call yourself.

Reframing Insights for Presentations and Deliverables

Because great insights deserve great formatting.

Once you’ve validated your bot’s work, it’s time to do something with it. Whether you're building a slide deck, drafting a messaging framework, writing a report, or prepping for a stakeholder meeting, your insights need to be clear, concise, and audience-ready.

Here’s how I collaborate with Ulysses to shape findings for different formats:

1. Create Slide-Ready Bullets

Your chatbot can rewrite theme summaries in concise, high-impact language — ideal for strategy decks or insight presentations.

Prompt:

“Rewrite these five themes as slide bullets. Use bold text for key takeaways. Keep each point to 1–2 sentences max.”

Bonus: You can also ask it to suggest slide titles, opening lines, or visual frameworks to reinforce the message.

2. Build Comparison Tables

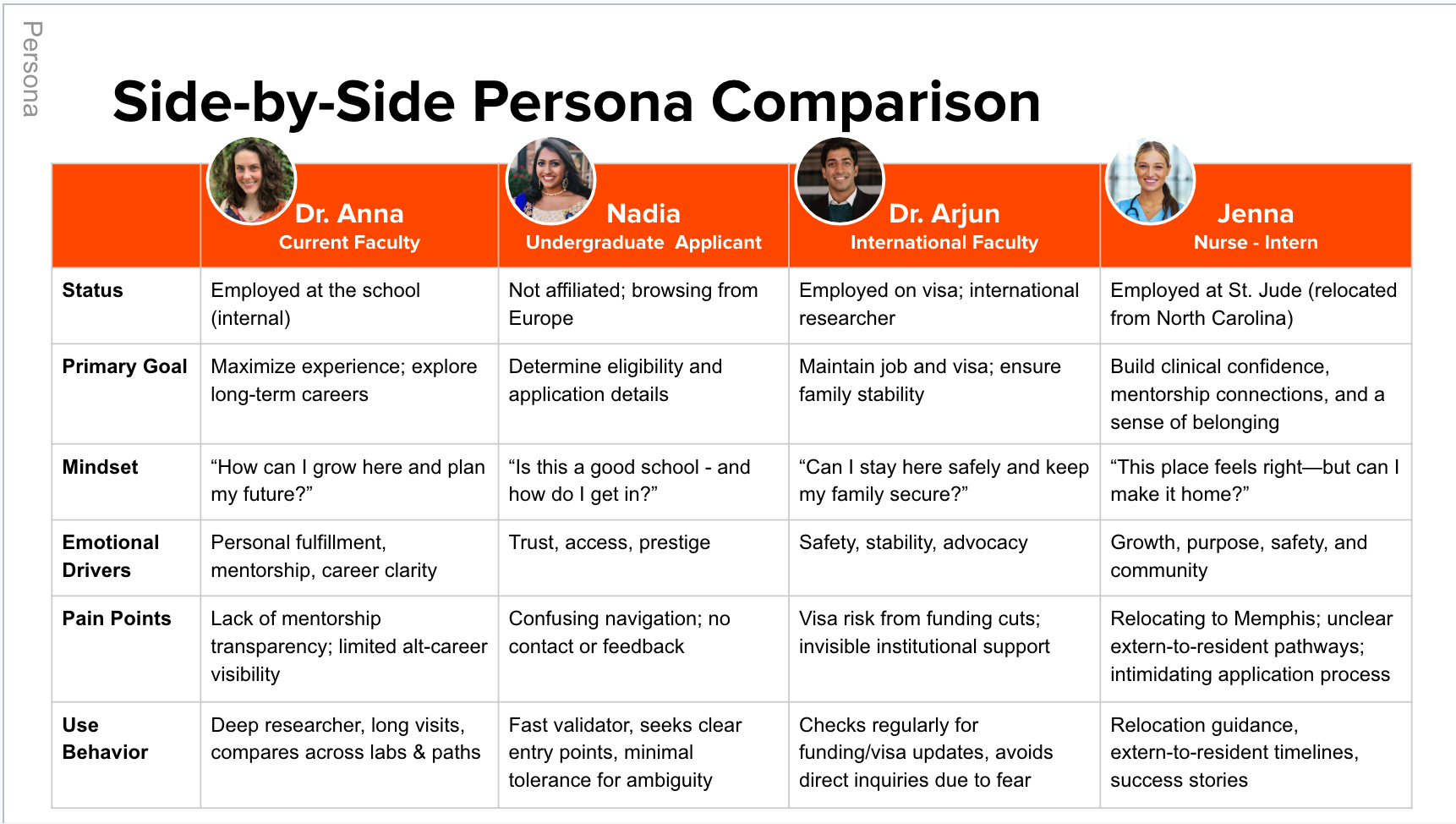

If your research includes multiple audience groups or segments, tables can be an excellent way to communicate differences at a glance.

Prompt:

“Please create a table comparing how prospective students, alumni, and faculty talked about the campus experience. Include a theme, frequency rating, and sample quote for each group.”

This structure works well for:

Personas

Journey mapping

Messaging differentiation

Cross-segment strategy development

3. Reframe by Use Case

Sometimes you need to express the same insight in multiple ways, depending on the audience.

Prompt:

“Reframe this theme three ways: (1) for a research report, (2) for a slide deck, and (3) for messaging development.”

This gives you flexible language for whatever you're building — without losing the core idea.

4. Boil It Down for Execs

Let’s be honest: not every stakeholder wants the nuance. When you need something punchy and to the point:

Prompt:

“Summarize this set of findings in three strategic takeaways suitable for a VP audience. Each should be short, clear, and actionable.”

Or:

“Turn this theme into a one-sentence headline insight I can use on a slide.”

The goal: less explanation, more clarity.

5. Align Tone to the Moment

Sometimes I want the voice to feel consultative and strategic. Other times, more neutral and report-like. You can ask your chatbot to adjust its tone.

Prompt:

“Rewrite these findings in a consultative tone for a leadership presentation.”

Or:

“Make this language more formal and appropriate for a published research report.”

Bottom line: my pal Ulysses doesn’t just help me analyze the data — it helps me present it better. It’s like having a strategist and a slide whisperer in one.

Final Thoughts: Let Your Chatbot Handle the Repetition — You Handle the Insight

This entire workflow is designed to help you get to better insights faster — without burning out on the tedious parts. Your new chatbot isn’t here to replace your judgment, voice, or human intuition. It’s here to support them.

Treat it like a partner. Feed it context. Push back when needed. And most importantly — stay grounded in the data.

Because the future of research isn’t human or AI. It’s collaborative.

Let’s Keep the Conversation Going

If you’ve made it this far — thank you. My hope is that my experiences with Ulysses give you a model for what’s possible when research and AI work hand in hand, without losing the human spark that makes insight meaningful in the first place.

If you’re experimenting with your own GPT, curious about qualitative analysis workflows, or just wondering where to start — I’d love to hear from you. Shoot me a note, share your experience, or ask a question. No AI required for that part.

You can reach me at jimdalglish@mac.com or connect with me on LinkedIn.

Jim Dalglish, Director of UX Research & Strategy

About the Author

Jim Dalglish is a strategist and researcher who helps organizations turn complexity into clarity. He specializes in qualitative insight, user experience, and the responsible use of AI in research. He’s worked across sectors — from education and healthcare to SaaS and the arts — and is passionate about making data more human.

When he’s not building GPTs or synthesizing interviews, you’ll probably find him writing plays, sailing, or walking the Cape Cod coastline.